Still worried about data leaks when building AI automation? You’re not alone. Large Language Models (LLMs) offer incredible power to streamline tasks, but they also bring new security and compliance headaches. This guide will show you how to confidently build secure LLM applications that meet enterprise standards, helping your business save time and reduce risk.

Why Security Is the Biggest Barrier to LLM Adoption

Enterprises are eager to use LLMs to automate tasks and improve workflows. However, many hit a wall when it comes to security. The potential for missteps is real, and the consequences can be severe.

Trust, Risk, and Enterprise Readiness

Before any new technology gets widely adopted, businesses need to trust it. For LLMs, this means addressing concerns around data handling, model behavior, and overall system integrity. Without a strong security foundation, the perceived risks outweigh the benefits, slowing down progress for AI automation. At Growth Design Studio, our problem-first approach ensures that security considerations are integrated into the practical automation design from the initial stages, building trust and accelerating AI adoption.

Regulatory Pressure Across Industries

From finance to healthcare, regulations demand strict data protection. When you build LLM apps, you’re not just dealing with internal policies; you’re also facing external compliance requirements. Failing to meet these standards can lead to hefty fines and damage to your reputation.

For more on deploying LLMs in production securely, check our best practices guide.

Key Security Risks in LLM Automation

Understanding the threats is the first step to building robust LLM systems. These threats aren’t just theoretical; they are practical challenges in any AI automation project.

Data Leakage and Prompt Injection

One major concern is data leakage. LLMs, by their nature, process a lot of information. Without proper controls, sensitive company data or customer information could accidentally appear in model outputs. Prompt injection is another threat: malicious inputs manipulate the LLM into revealing confidential data or performing unintended actions.

Model Misuse and Unintended Actions

An LLM might generate inappropriate content, provide incorrect advice, or even trigger actions it shouldn’t. This isn’t always malicious; sometimes it’s a result of an improperly constrained model or unforeseen user interaction. You need safeguards to prevent these unintended actions and keep your operations running smoothly.

Third-Party API Risks

Many LLM apps connect to external APIs to get data or perform actions. Each external connection introduces a new point of vulnerability. For example, if you build LLM apps with tools like n8n for orchestration, you need to ensure every API connection is secure and properly authenticated to prevent unauthorized access or data breaches.

Growth Design Studio specializes in custom API integrations and end-to-end workflow orchestration using n8n, ensuring that every external connection is fortified with best practices for authentication and data security.

Learn beginner-friendly steps in our guide on how to build LLM apps.

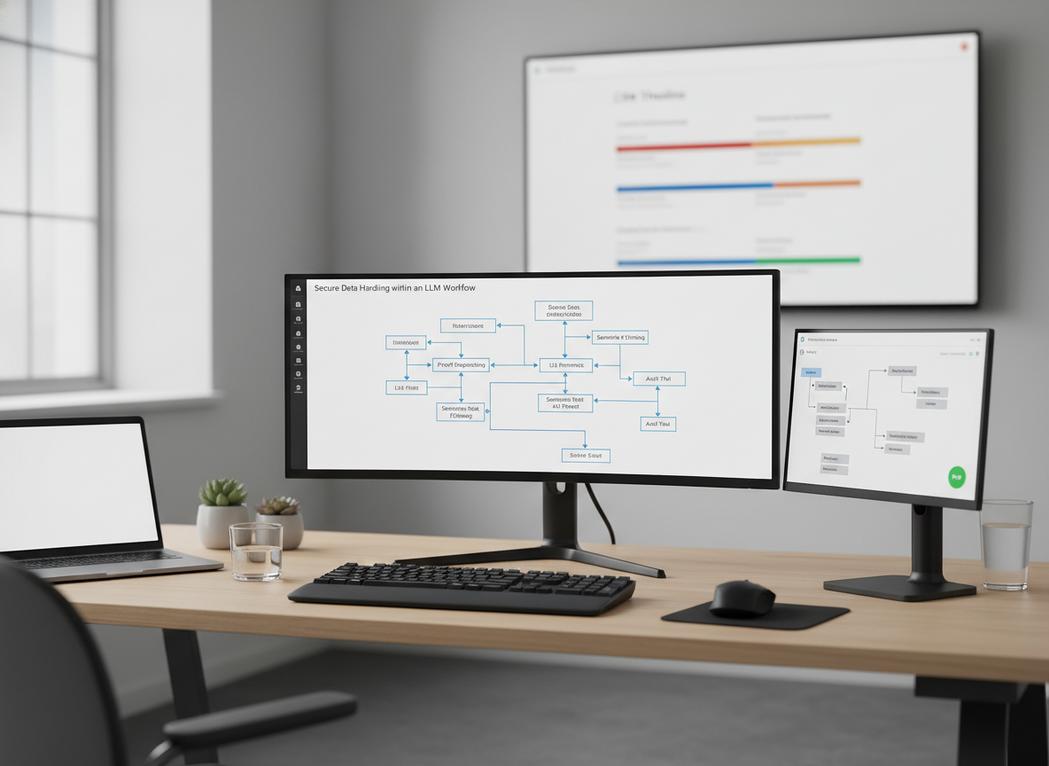

Data Privacy, Governance, and Securing LLM Workflows

Handling data correctly is paramount when working with AI automation. This means thinking about where data lives, how long it stays there, and who can see it.

Handling Sensitive and PII Data

Any LLM system that processes Personally Identifiable Information (PII) or other sensitive data requires careful design. You need to implement strong encryption, data masking, and access controls. The goal is to minimize the exposure of sensitive data while still allowing the LLM to perform its intended functions.
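
As a minimal sketch of data masking, the snippet below replaces email addresses and phone-like numbers with placeholder tokens before text reaches a model. The two regular expressions and the `mask_pii` helper are illustrative assumptions, not a complete PII detector; real deployments usually need broader coverage (names, addresses, ID numbers) and a dedicated detection tool.

```python
import re

# Hypothetical patterns for illustration only; they will miss many PII formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{8,}\d")


def mask_pii(text: str) -> str:
    """Replace emails and phone-like numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


masked = mask_pii("Contact Priya at priya@example.com or +91 98765 43210.")
print(masked)
```

Masking before the model call means the LLM can still work with the structure of the message while the raw identifiers never leave your system.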

Growth Design Studio builds solutions using secure, scalable automation frameworks with best practices for data handling, specifically addressing the challenges of PII and sensitive information.

Data Residency and Retention

Where your data is stored matters, especially for compliance. Some regulations require data to reside in specific geographic locations. You also need clear policies on how long data is retained, both for inputs and outputs from your LLM workflows. For instance, data used to build or train LLM apps should fall under the same retention policy as the rest of your data.
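
A retention policy can be sketched as a simple filter over stored records. The 30-day window, the `created_at` field, and the `purge_expired` helper below are assumptions chosen for illustration; your actual retention period should come from your compliance team.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window; set this from your own policy.
RETENTION = timedelta(days=30)


def purge_expired(records, now=None):
    """Return only the records that are still inside the retention window."""
    now = now or datetime.now(timezone.utc)
    return [r for r in records if now - r["created_at"] <= RETENTION]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
stored = [
    {"id": 1, "created_at": now - timedelta(days=5)},
    {"id": 2, "created_at": now - timedelta(days=45)},
]
kept = purge_expired(stored, now=now)
print([r["id"] for r in kept])
```

In practice a job like this would run on a schedule against wherever prompts and outputs are stored.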

India-Specific Compliance Considerations

In India, data protection law is becoming stricter, notably with the Digital Personal Data Protection Act, 2023. Enterprises building LLM applications must align with local regulations, which often include requirements for data localization and consent management. Ignoring these regional specifics can create significant legal risks.

Input and Output Validation

Before an LLM processes any input, validate it. Check for malicious code, unusual patterns, or attempts at prompt injection. Similarly, validate the output from the LLM before it’s used or displayed. This acts as a crucial barrier against both accidental errors and deliberate attacks.
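
Here is a rough sketch of validation on both sides of the model call. The patterns, the length limit, and the `validate_input` and `validate_output` helpers are hypothetical examples. Pattern matching alone will not stop a determined attacker, so treat this as one layer among several rather than a full defense.

```python
import re

# Hypothetical deny-list; real attacks vary far more than these phrases.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (your )?(system prompt|hidden instructions)", re.I),
]
MAX_INPUT_CHARS = 4000  # assumed limit for illustration
# Assumed shape of a secret key, e.g. "sk-" followed by 16+ characters.
SECRET_RE = re.compile(r"\b(?:sk|api)[-_][A-Za-z0-9]{16,}\b")


def validate_input(user_text: str) -> str:
    """Reject oversized input or input matching a known injection phrase."""
    if len(user_text) > MAX_INPUT_CHARS:
        raise ValueError("input too long")
    for pattern in INJECTION_PATTERNS:
        if pattern.search(user_text):
            raise ValueError("possible prompt injection")
    return user_text


def validate_output(model_text: str) -> str:
    """Redact anything in the model output that looks like a secret key."""
    return SECRET_RE.sub("[REDACTED]", model_text)
```

A typical flow would call `validate_input` before sending text to the model and `validate_output` before showing or acting on the response.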

Role-Based Access Controls

Not everyone needs the same level of access to your LLM systems. Implement role-based access controls (RBAC) to ensure that only authorized personnel can configure, deploy, or monitor your LLM apps. This limits the “blast radius” if a user account is compromised.
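
A minimal RBAC check might look like the sketch below. The three roles and the action names (`monitor`, `deploy`, `configure`) are assumptions that mirror the activities mentioned above; map them to whatever roles your organization already uses.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    OPERATOR = "operator"
    ADMIN = "admin"


# Hypothetical role-to-action mapping for illustration.
PERMISSIONS = {
    Role.VIEWER: {"monitor"},
    Role.OPERATOR: {"monitor", "deploy"},
    Role.ADMIN: {"monitor", "deploy", "configure"},
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True if the role is permitted to perform the action."""
    return action in PERMISSIONS.get(role, set())


def require(role: Role, action: str) -> None:
    """Raise PermissionError when the role may not perform the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"{role.value} may not {action}")
```

Calling `require(user_role, "deploy")` at the top of a deployment function keeps the check in one place and makes denied attempts easy to log.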

Tool and API Permissions

When you build LLM apps that integrate with other tools or APIs, define precise permissions. Give these integrations only the access they absolutely need, and nothing more. For example, if your LLM connects to a CRM, it should only have permission to read and write specific data fields, not access the entire database. Growth Design Studio’s expertise in custom API integrations and robust workflow orchestration ensures that all tool and API permissions are meticulously defined and adhered to, minimizing risk.
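
The CRM example can be sketched as a field-level allowlist that sits between the LLM and the CRM client. The field names and the `readable_view` and `check_write` helpers are hypothetical; the point is that the integration code, not the model, decides which fields are reachable.

```python
# Hypothetical allowlists: the LLM integration may read three fields
# and write only one of them.
ALLOWED_FIELDS = {
    "read": {"name", "email", "status"},
    "write": {"status"},
}


def readable_view(record: dict) -> dict:
    """Strip every field the integration is not allowed to read."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS["read"]}


def check_write(update: dict) -> None:
    """Reject an update that touches any field outside the write allowlist."""
    blocked = set(update) - ALLOWED_FIELDS["write"]
    if blocked:
        raise PermissionError(f"write not allowed for: {sorted(blocked)}")


crm_record = {"name": "Asha", "email": "asha@example.com",
              "status": "lead", "credit_limit": 50000}
print(readable_view(crm_record))
```

Where the CRM itself supports scoped API keys, prefer enforcing the same limits there as well, so the allowlist is not the only barrier.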

Model Governance, Compliance, and Building Trustworthy LLM Systems

You need clear ways to track, manage, and understand how your LLMs are operating. This transparency is key to maintaining trust and proving compliance.

Prompt and Model Version Control

Just like code, prompts and models need version control. This lets you track changes, revert to previous versions if needed, and understand how different iterations impact performance and security. When you build LLM apps, documenting prompt changes is as important as documenting code changes.
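
One lightweight way to version prompts, assuming they are stored as plain text alongside your code, is to derive an identifier from the prompt text and the model name. The `PromptVersion` class and the example model name below are illustrative, not a standard API.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    name: str
    text: str
    model: str  # hypothetical model identifier

    @property
    def version_id(self) -> str:
        """Short content hash: changes whenever the text or model changes."""
        payload = f"{self.model}\n{self.text}".encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


v1 = PromptVersion("invoice_summary", "Summarize the invoice.", "example-model-1")
v2 = PromptVersion("invoice_summary", "Summarize the invoice in 3 bullets.", "example-model-1")
print(v1.version_id != v2.version_id)
```

Recording `version_id` with every request makes it possible to tie any output back to the exact prompt and model that produced it.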

Growth Design Studio designs solutions to be modular and auditable, facilitating comprehensive version control for prompts and models. Consider how to choose the right LLM for your automation needs to ensure compatibility with security protocols.

Explainability and Logging

You should be able to understand why an LLM made a particular decision or generated a specific output. Implement logging for all interactions, inputs, and outputs. This audit trail is invaluable for debugging, identifying security incidents, and demonstrating compliance to regulators.
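
An audit trail can start as one structured log line per interaction. The sketch below uses Python’s standard `logging` and `json` modules; the logger name, the field names, and the `log_interaction` helper are assumptions. If prompts may contain PII, mask them before they are written to the log.

```python
import json
import logging
import uuid
from datetime import datetime, timezone

audit_logger = logging.getLogger("llm.audit")  # hypothetical logger name


def build_audit_record(user_id, prompt, output, prompt_version):
    """Assemble one JSON-serializable record per LLM interaction."""
    return {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt_version": prompt_version,
        "prompt": prompt,
        "output": output,
    }


def log_interaction(user_id, prompt, output, prompt_version):
    """Write the record as a single JSON line and return it."""
    record = build_audit_record(user_id, prompt, output, prompt_version)
    audit_logger.info(json.dumps(record))
    return record
```

Because each line is valid JSON, the records can be shipped to whatever log store your security team already monitors.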

Incident Response Planning

Even with the best security, incidents can happen. Have a clear plan for what to do if there’s a data breach, a model misuse event, or any other security incident. A swift and organized response can minimize damage and restore confidence.

ISO, SOC 2, and Enterprise Policies

Many enterprises already adhere to standards like ISO 27001 or SOC 2. Your LLM deployments need to fit within these existing frameworks. This means documenting your security controls, conducting regular audits, and ensuring your LLM apps meet the same rigorous standards as the rest of your IT infrastructure.

Aligning with Internal Risk Teams

Don’t go it alone. Work closely with your internal risk and compliance teams from the start. They can provide valuable guidance on existing policies, identify potential gaps, and help you navigate the complex landscape of enterprise security.

Human Oversight and Approvals

While LLMs automate tasks, human oversight remains crucial. Implement workflows that include human review and approval for critical decisions or sensitive outputs. This adds a layer of safety and helps keep the automation aligned with business goals.
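
An approval gate can be sketched as a check that holds sensitive actions until a named person signs off. The action names, the `ProposedAction` class, and the `run_action` helper are hypothetical; in a tool like n8n the same idea would typically be a manual approval step in the workflow.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical list of actions that always need a human sign-off.
SENSITIVE_ACTIONS = {"send_payment", "delete_record"}


@dataclass
class ProposedAction:
    name: str
    payload: dict = field(default_factory=dict)


def needs_review(action: ProposedAction) -> bool:
    """Return True when the action is on the sensitive list."""
    return action.name in SENSITIVE_ACTIONS


def run_action(action: ProposedAction, approved_by: Optional[str] = None) -> str:
    """Hold sensitive actions until a reviewer is recorded; run the rest."""
    if needs_review(action) and not approved_by:
        return "pending_review"
    return "executed"


print(run_action(ProposedAction("send_payment", {"amount": 1200})))
```

Passing the reviewer’s identity as `approved_by` also gives you something concrete to record in the audit trail.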

Continuous Risk Assessment

Security isn’t a one-time setup; it’s an ongoing process. Regularly assess new threats, update your security measures, and adapt to the evolving capabilities of LLMs. This proactive approach helps you stay ahead of potential vulnerabilities.

Explore how AI automation compares to traditional methods to better integrate security in your workflows.

Frequently Asked Questions

What are the main security risks in enterprise LLM automation?

Key risks include data leakage, prompt injection attacks, model misuse, and vulnerabilities from third-party APIs. Implementing validation, access controls, and encryption can mitigate these threats effectively.

How can enterprises ensure compliance when building LLM apps?

Align with standards like ISO 27001 and SOC 2, manage data residency, handle PII securely, and conduct regular audits. Collaborating with internal risk teams is essential for regulatory adherence.

What role does governance play in LLM security?

Model governance involves version control for prompts and models, detailed logging for auditability, and incident response planning. These practices build trust and ensure explainability in LLM operations.

Why is input validation important for LLM workflows?

Input validation detects malicious patterns or injection attempts before processing, while output validation prevents unintended disclosures. This dual layer protects against both accidental and deliberate security breaches.

From Experimentation to Enterprise Trust

AI automation offers massive potential, but securing your LLM applications is not optional for enterprises. By focusing on robust security practices, data privacy, and clear governance, you can move confidently from experimentation to deploying trustworthy, impactful LLM systems that save time and drive real results. This helps your team use AI automation to build LLM apps that truly enhance your business.

Growth Design Studio is dedicated to helping small and mid-sized businesses navigate these complexities, providing fast implementation and focusing on real business outcomes for secure and reliable AI automation.

Want secure, compliant LLM automation built for your enterprise? Book your free automation audit.